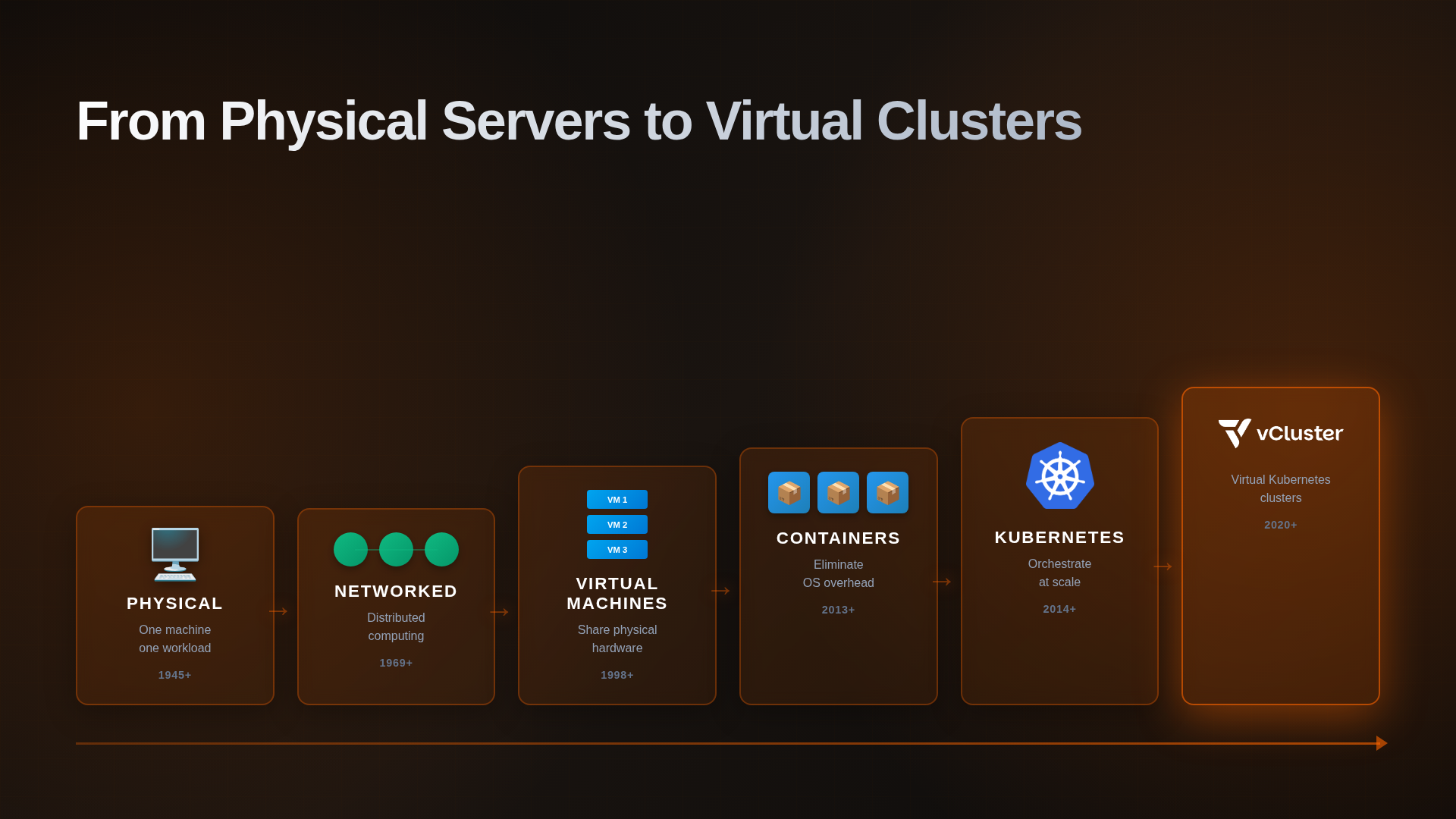

From Physical Servers to vCluster: Understanding Kubernetes Multi-Tenancy

TL;DR: This post traces the evolution of computing abstractions from physical servers to virtual Kubernetes clusters.

Each layer solved a real problem while creating a new one: physical servers left resources stranded, which led to VMs; VMs carried the overhead of a full guest OS, which led to containers; containers at scale brought orchestration complexity, which led to Kubernetes; and shared Kubernetes clusters struggle with multi-tenancy isolation, which led to virtual clusters.

vCluster enables teams to run fully functional virtual Kubernetes clusters inside existing infrastructure, providing control plane isolation that namespaces cannot match.

Last week I returned from KCD UK where I led a workshop introducing people to vCluster (try it yourself here). At the workshop and at our booth, we fielded dozens of questions from people with wildly different backgrounds.

Some attendees had deep Kubernetes expertise but had never heard of virtual clusters. Others worked with containers daily and were only beginning to explore the orchestration layer.

The most common question? "What is vCluster, and why would I need it?"

The short answer: vCluster is an open-source solution that enables teams to run virtual Kubernetes clusters inside existing infrastructure. These virtual clusters are Certified Kubernetes Distributions that provide strong workload isolation while running as nested environments on top of another Kubernetes cluster.

But to understand why that matters, and whether you need it, it helps to see how we got here.